Pilot

The user selects note patterns from existing compositions and morphs between them in real time to produce new variations. Here, a note pattern means a MIDI clip in an Ableton Live set.

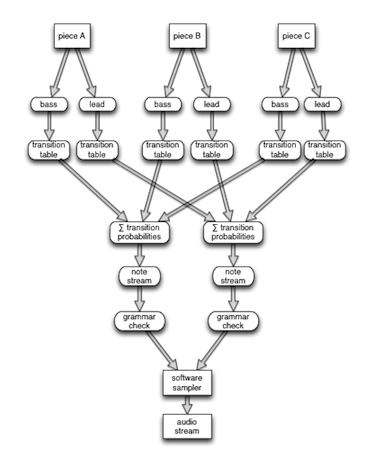

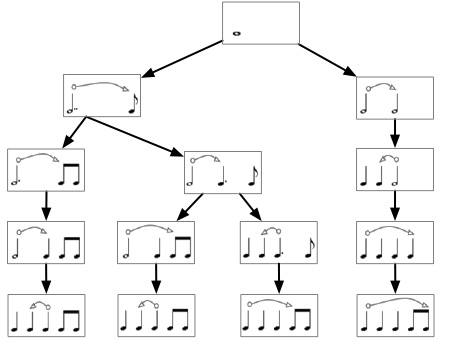

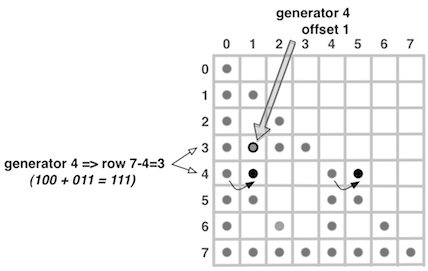

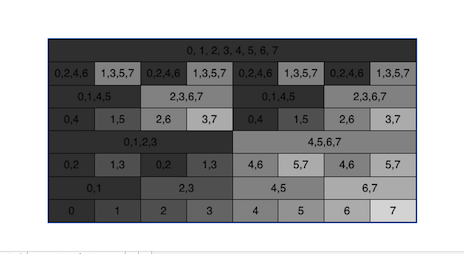

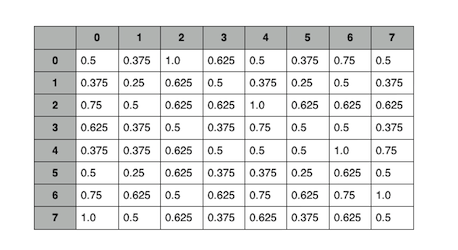

Pilot analyzes the selected patterns and regenerates their most basic, generic building blocks. Some building blocks are audible on the musical surface; others exist only as deeper scaffolding for those surface patterns. Each composition assigns different probabilities to combinations of these building blocks, and Pilot lets the user control which combinations prevail in the musical output.
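The probability model itself is not described here. As a minimal sketch, assuming each analyzed composition is reduced to a probability table over building-block combinations (the combination labels in the usage note are hypothetical), user control can be modeled as a weighted mixture of those tables, from which the next combination is sampled:

```python
import random
from collections import defaultdict

# Hypothetical representation: each analyzed composition is a dict mapping a
# building-block combination (any string label) to its probability.
Distribution = dict[str, float]


def mix_distributions(dists: list[Distribution], weights: list[float]) -> Distribution:
    """Blend per-composition distributions into one, in proportion to user weights."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    mixed: dict[str, float] = defaultdict(float)
    for dist, w in zip(dists, weights):
        for combo, p in dist.items():
            # Each composition contributes its probability scaled by its normalized weight.
            mixed[combo] += (w / total) * p
    return dict(mixed)


def sample_combination(mixed: Distribution, rng: random.Random) -> str:
    """Draw one building-block combination from the blended distribution."""
    combos = list(mixed)
    return rng.choices(combos, weights=[mixed[c] for c in combos], k=1)[0]
```

For example, `mix_distributions([{"root-fifth": 0.7, "octave-leap": 0.3}, {"root-fifth": 0.2, "walking": 0.8}], [3.0, 1.0])` favors the first composition three to one. Putting all the weight on a single composition reproduces its statistics unchanged.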

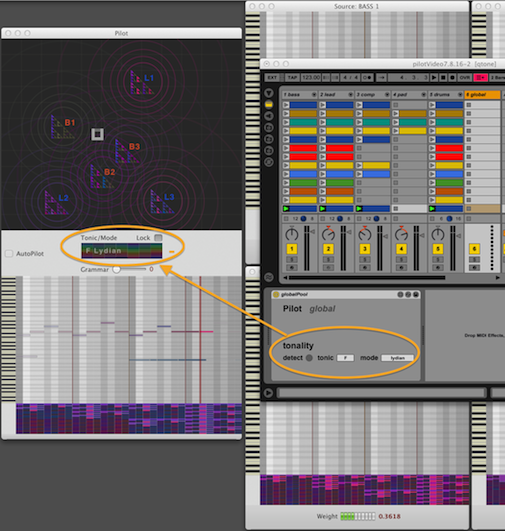

Pilot is implemented as custom Max-for-Live devices and Max/MSP patches and externals, networked via OSC (Open Sound Control) to a macOS Objective-C application.

Shapes on a plane

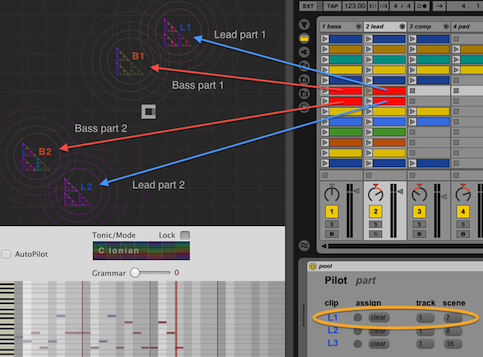

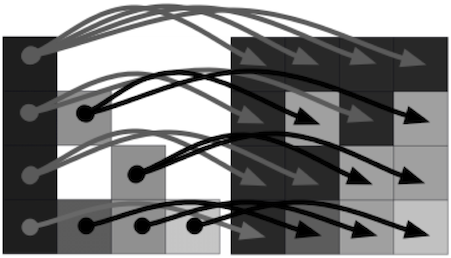

The user selects MIDI Clips from a Live set and positions them on a plane that represents a 2D musical landscape. Pilot analyzes each note pattern placed on that landscape.

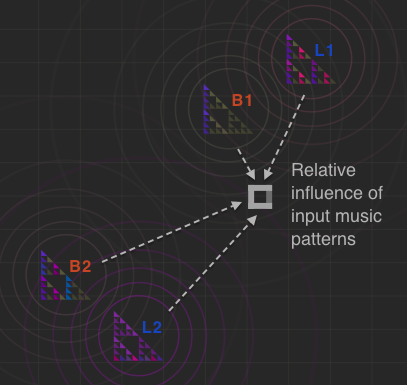

During playback, the user creates new music by clicking and dragging the pointer on the landscape. Each location generates a stream of notes based on its relative distance to each input pattern.

The input note patterns can also be moved around to warp the musical landscape in real time. The distance between the pointer and each icon determines how much influence that note pattern has on each generated melody. Dragging the pointer from one clip to another gradually morphs the generated notes between the note patterns in those clips.
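The text does not state how distance maps to influence. One common choice, swapped in here as an assumption, is inverse-distance (Shepard) weighting: each pattern's weight is proportional to 1/d^p and the weights are normalized to sum to 1. The function name and its `power` parameter are hypothetical:

```python
import math

Point = tuple[float, float]


def influence_weights(pointer: Point, icons: list[Point], power: float = 2.0) -> list[float]:
    """Inverse-distance weights: closer icons get more influence; weights sum to 1."""
    distances = [math.dist(pointer, icon) for icon in icons]
    # If the pointer sits exactly on an icon, that pattern takes full influence.
    for i, d in enumerate(distances):
        if d == 0.0:
            return [1.0 if j == i else 0.0 for j in range(len(icons))]
    raw = [1.0 / d ** power for d in distances]
    total = sum(raw)
    return [r / total for r in raw]
```

With two icons, dragging the pointer from one to the other moves the weights smoothly from `[1.0, 0.0]` through `[0.5, 0.5]` at the midpoint to `[0.0, 1.0]`. Moving an icon changes every distance at once, which is one way to read "warping" the landscape. A larger `power` makes each pattern's influence more local.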

Navigate musical patterns

Original music is created by morphing between riffs that have been analyzed by Pilot. As Live plays, Pilot generates note patterns that are shaped by dragging the pointer among MIDI clips positioned on the plane.

As an example, the user can select three bass MIDI clips on one Live track and three lead MIDI clips on another track. Each clip is selected by clicking on it in Live and then hitting one of the assignment buttons on the Pilot device. Three bass icons and three lead icons then appear on the landscape, each displaying the note analysis performed by Pilot.

During playback, Pilot morphs between the lead clips on one track and between the bass clips on the other, calculating two note streams in parallel within a common harmony and tempo determined by the Live scene.

Steerable generative algorithm

Integration into Live scenes

Pilot dissects and regenerates the music in a strictly organic fashion, without patching together prefabricated musical figures, transitions, rules, or similar elements. The only musical building blocks are those that result purely from the generative process.

It is, however, designed to coexist with static MIDI clips on other tracks in a Live scene, and to respect the overall harmony and tempo of that scene. In fact, the generated note streams automatically adjust their key and mode to match the currently playing scene.
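Pilot's exact mechanism for adjusting key and mode isn't described. The simplest assumption is to snap each generated note to the nearest pitch of the scene's scale. The hypothetical `conform_to_scene` below takes the tonic as a pitch class (0 = C) and a mode name from a small table:

```python
# Semitone offsets of a few common modes relative to the tonic.
MODES = {
    "ionian": (0, 2, 4, 5, 7, 9, 11),
    "dorian": (0, 2, 3, 5, 7, 9, 10),
    "aeolian": (0, 2, 3, 5, 7, 8, 10),
    "mixolydian": (0, 2, 4, 5, 7, 9, 10),
}


def conform_to_scene(note: int, tonic: int, mode: str) -> int:
    """Snap a MIDI note number to the nearest pitch in the given key and mode."""
    allowed = {(tonic + step) % 12 for step in MODES[mode]}
    # Search outward from the note, checking the lower neighbor first so ties resolve downward.
    for offset in range(7):
        for candidate in (note - offset, note + offset):
            if 0 <= candidate <= 127 and candidate % 12 in allowed:
                return candidate
    raise ValueError("no scale tone found within MIDI range")
```

For example, in C major (`tonic=0`, `"ionian"`), a generated C#4 (61) becomes C4 (60), while notes already in the scale pass through untouched. A fuller implementation might instead transpose by scale degree so that melodic contour is preserved across key changes.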

Pilot post-processes the generative output, applying grammatical rules to the resulting note patterns in order to maximize musical coherence within the target harmony. The user controls the degree to which these grammatical rules are imposed on the final output.
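The specific grammatical rules are not listed. As a minimal sketch, assuming each rule is a function that may correct a note given the previous one, the user's control can be modeled as a `strictness` probability with which each rule is enforced. The `limit_leap` rule below is a hypothetical example, not one of Pilot's documented rules:

```python
import random
from typing import Callable

# A rule sees the previous note and the candidate note, and returns a (possibly corrected) note.
Rule = Callable[[int, int], int]


def limit_leap(prev: int, note: int, max_leap: int = 12) -> int:
    """Hypothetical rule: fold leaps larger than max_leap back by octaves toward prev."""
    while note - prev > max_leap:
        note -= 12
    while prev - note > max_leap:
        note += 12
    return note


def post_process(notes: list[int], rules: list[Rule], strictness: float,
                 rng: random.Random) -> list[int]:
    """Apply each rule with probability `strictness` (0 = raw output, 1 = always enforced)."""
    if not notes:
        return []
    out = [notes[0]]
    for note in notes[1:]:
        for rule in rules:
            if rng.random() < strictness:
                note = rule(out[-1], note)
        out.append(note)
    return out
```

With `strictness=1.0`, the sequence `[60, 85, 40]` becomes `[60, 61, 52]`; with `strictness=0.0` it is returned unchanged. Intermediate values let occasional rule-breaking notes through, which is one way to trade coherence against surprise.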

Motivations and alternate implementations

The goal is to interbreed musical snippets from previous compositions, letting their note structures warp each other to generate new material, and then to feed the newly generated compositions back in as inputs for further music generation. Over time, this evolves a library of dynamic music patterns and pieces that exist within an actual musical genealogy.

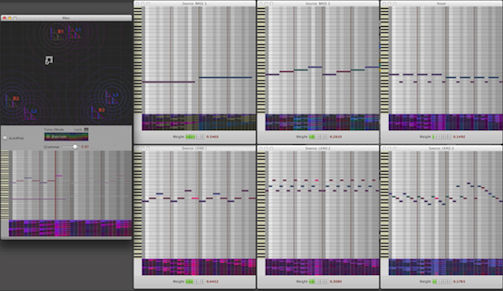

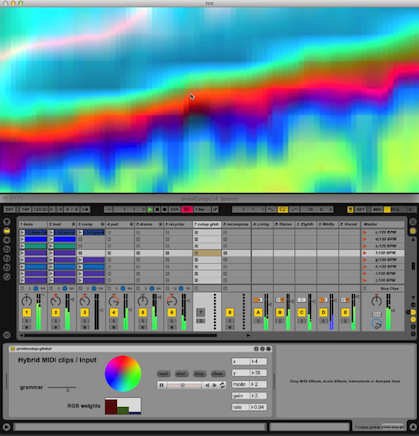

Alternate UIs have also been implemented to control these algorithms; one interface uses RGB values in images and videos. Jitter output is shown here controlling musical input weights based on the changing RGB values under the cursor.
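How the Jitter patch turns pixel colors into weights is not specified. A minimal sketch, assuming three input patterns with one color channel assigned to each (a hypothetical mapping), normalizes the pixel under the cursor into weights that sum to 1:

```python
def rgb_to_weights(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Map the pixel under the cursor to influence weights for three input patterns.

    Each channel drives one pattern; a black pixel (0, 0, 0) falls back to equal weights.
    """
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError("RGB channels must be in 0..255")
    total = r + g + b
    if total == 0:
        return (1 / 3, 1 / 3, 1 / 3)
    return (r / total, g / total, b / total)
```

A pure red pixel gives all influence to the first pattern, while `(100, 100, 200)` yields `(0.25, 0.25, 0.5)`. As a video plays under a stationary cursor, the weights, and therefore the generated notes, change from frame to frame.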

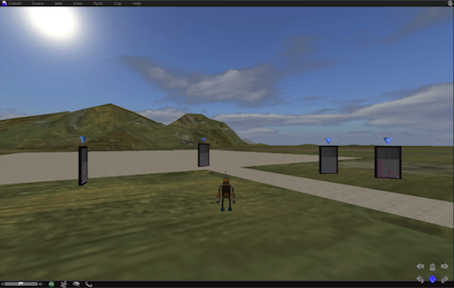

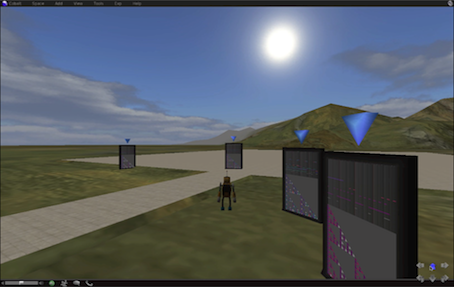

Multi-user domains, such as games and installations, provide settings where collective musical influences and actions come into play. The accompanying images show an OpenCobalt (Squeak/Croquet) environment that uses OSC to communicate with the Pilot Max-for-Live devices.

Please see here for additional future directions.

External application

This project is a further stage of the work previously described here as the Replayer project. To provide data visualization, note editing, and faster performance, the C-based functionality has been moved from a Max external to a separate macOS application, which uses OSC to communicate with Max for Live.